Harnessing AI Potential Without the High Costs

In the rapidly evolving field of artificial intelligence, advancing model capabilities often comes with significant financial and environmental costs. Traditional training methods require substantial computational resources, leading to high expenses and a considerable carbon footprint. A study from researchers at Stanford University, the University of Washington, and the Seattle-based Allen Institute for AI (Ai2) offers a promising alternative. By introducing a technique that lets AI models deliberate longer before responding, the researchers report improved accuracy without the hefty training bill. The approach not only eases financial constraints but also opens new avenues for more sustainable AI development.

The Power of Test-Time Scaling

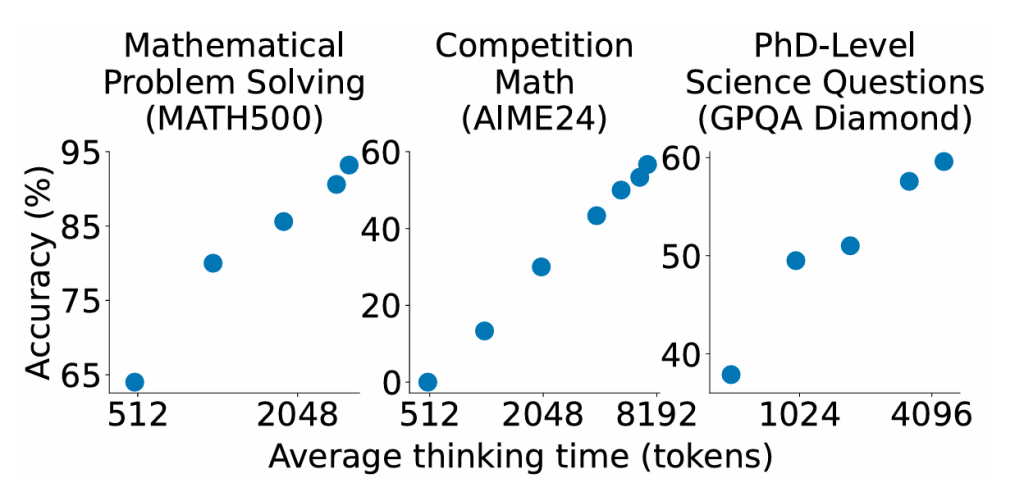

At the core of this advancement lies a simple method called "test-time scaling." Essentially, the technique gives an AI model more time to ‘think’ before it provides an answer. Imagine allowing a student extra time to revise their work: the pause often leads to better, more accurate responses. By processing the problem more thoroughly, the model can review and refine its answer, reducing errors and improving overall performance. Because the extra effort is spent when the model answers rather than during training, it is an attractive alternative to resource-intensive training practices.
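To make the idea concrete, here is a minimal sketch of a controller that refuses to let a model stop "thinking" too early. It is not the researchers' implementation. The function `think_with_budget`, the stand-in `toy_model_step`, the `<end>` marker, and the "Wait" cue are all hypothetical names chosen for illustration, and the toy model is a hard-coded stub rather than a real language model. The sketch only shows the control loop: if the model tries to finish before a minimum number of calls, the controller appends a cue and asks it to continue.

```python
from typing import Callable, List

END_OF_THINKING = "<end>"  # hypothetical marker: the model wants to stop reasoning
CONTINUE_CUE = "Wait"      # hypothetical cue that nudges the model to keep reasoning


def think_with_budget(
    step: Callable[[List[str]], str], min_steps: int, max_steps: int
) -> List[str]:
    """Call the model up to max_steps times; ignore stop requests before min_steps calls."""
    trace: List[str] = []
    for call in range(max_steps):
        out = step(trace)
        if out == END_OF_THINKING:
            if call >= min_steps:
                break                   # enough thinking: honor the stop request
            trace.append(CONTINUE_CUE)  # too early: ask the model to reconsider
        else:
            trace.append(out)
    return trace


def toy_model_step(trace: List[str]) -> str:
    """Hard-coded stub: drafts a wrong answer, then corrects it only if nudged."""
    if not trace:
        return "draft: 17 * 3 = 41"
    if trace[-1] == CONTINUE_CUE:
        return "check: 17 * 3 = 51"
    return END_OF_THINKING


# Small budget: the stop request is honored right after the first draft.
quick = think_with_budget(toy_model_step, min_steps=1, max_steps=8)
# Larger budget: the early stop is overridden and the stub revisits its draft.
careful = think_with_budget(toy_model_step, min_steps=2, max_steps=8)
print(quick[-1])
print(careful[-1])
```

With the smaller budget the stub stops at its uncorrected draft; with the larger one it is nudged once and ends on the corrected line. A real system would make the same decision over generated tokens rather than whole strings, and the extra thinking is paid for in inference compute.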

A Broader Movement Towards Efficient AI

This research is part of a larger trend in the AI community, exemplified by efforts such as DeepSeek's, that seeks to enhance AI performance while minimizing costs. The study reports that training its reasoning model, s1, required less than $50 in cloud computing credits, in stark contrast to the typical expense of AI development. This shift towards efficiency underscores a growing emphasis on accessibility and sustainability in AI advancement.

Democratizing AI Through Open Source

In a commendable move, the researchers have made the s1 model, along with its data and code, openly available on GitHub. This decision embodies the spirit of collaboration and democratization in AI research. By providing free access, the team encourages broader participation and innovation, allowing developers worldwide to build upon their work. This philosophy not only accelerates progress but also ensures that the benefits of AI advancements are shared widely.

A Collaborative Research Effort

The success of this project is a testament to the power of collaboration. The research team, comprising experts from Stanford University, the University of Washington, and Ai2, brings a wealth of expertise in areas such as natural language processing, machine learning, and AI reasoning. Their diverse backgrounds and collective knowledge have been instrumental in developing this innovative technique, setting a precedent for future interdisciplinary efforts.

The Future of AI: Efficient, Accessible, and Sustainable

The implications of this research extend beyond immediate advancements, pointing to a future where AI development is more efficient, accessible, and environmentally sustainable. As the AI community continues to embrace collaborative and cost-effective methods, we can expect significant strides in both capability and affordability. This study not only paves the way for more sophisticated AI models but also challenges the status quo, encouraging a shift towards more mindful and sustainable innovation. For those interested, the s1 model, its data, and its code are available on GitHub alongside the research paper, inviting everyone to explore and contribute to this new chapter in AI.